
Scalene 53: Collapse / decline / writing

Humans | AI | Peer review. The triangle is changing.

There is a subtle undercurrent to this week’s stories suggesting that human peer review is worth fighting for. No arguments there from me. For all its failings, the best human review is the gold standard. But there aren’t enough good reviewers to handle the number of papers we produce nowadays, and certainly not enough who approach that gold standard. The question is no longer how we keep AI out of peer review, but how we can use AI ethically to help evaluate papers when no qualified human is available. To paraphrase Ethan Mollick, AI only needs to be better than the best available human. And if no human reviewers are available, what then?

2nd February 2026

1//

Preventing the Collapse of Peer Review Requires Verification-First AI

arXiv.org - 23 Jan 2026 - 23 min read

Training AI to write reviews like a human is a bad idea. It looks like progress, but it just speeds up the appearance of peer review without anyone actually checking whether the science is solid. The authors show that once there are more claims than humans can verify, researchers start gaming the system — no matter how prestigious the journal. The real answer could be deploying AI as an adversarial auditor that produces hard, checkable evidence.

I also enjoyed the final sentence of the Claude review, which states that “Readers in scholarly publishing operations tend to disengage from arguments that feel alarmist, even when the underlying logic is sound.”

https://arxiv.org/abs/2601.16909

Claude peer review: https://claude.ai/public/artifacts/06565155-9970-4f1d-98fb-8e1bd7ddfaaf

2//

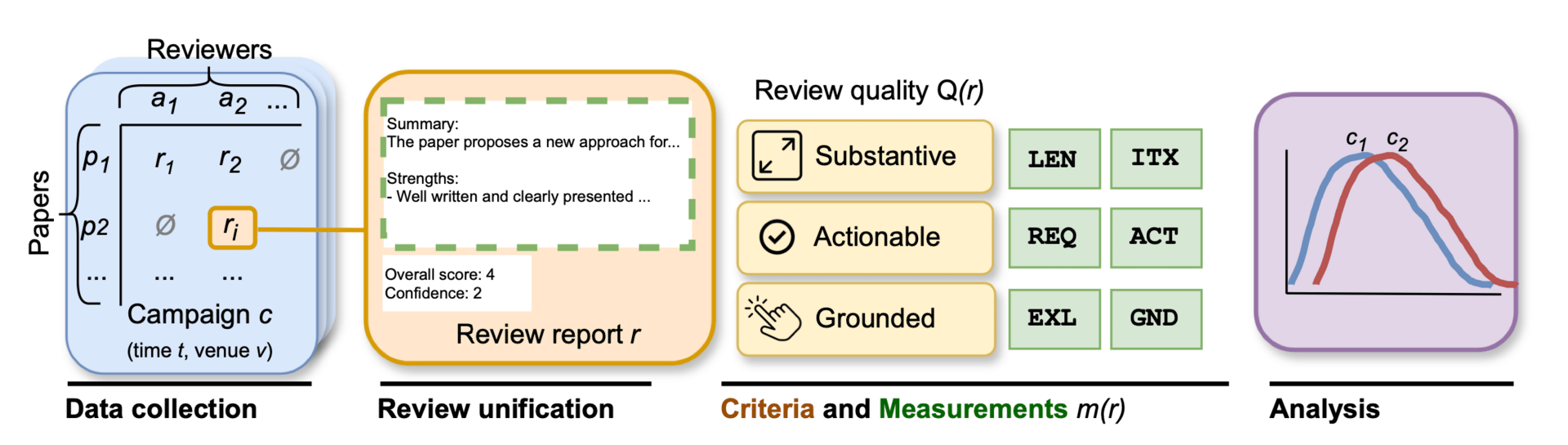

Is Peer Review Really in Decline? Analyzing Review Quality across Venues and Time

arXiv.org - 21 Jan 2026 - 26 min read

TL;DR: Not really. The authors analysed thousands of reviews from ICLR, NeurIPS and ARR between 2018 and 2025 using six automated quality metrics, and found no consistent drop in median review quality over time. However, they argue that as submission volumes balloon, more individual researchers will inevitably encounter poor reviews, and that this perception, amplified by social media, may be driving the narrative more than the data. The Claude review offers a more nuanced take.

https://arxiv.org/abs/2601.15172

Claude peer review: https://claude.ai/public/artifacts/d8c02518-32e9-479b-8a0a-283b971bcaf7

3//

If the economics make sense, should we pay peer reviewers?

Scholarly Futures - 27 Jan 2026 - 4 min read

Phil Garner reports on Becaris Publishing’s experiments with several initiatives, including paying peer reviewers. Alongside several indirect benefits (relating to submissions and discoverability), paying reviewers appeared to produce higher response rates to invitations, more repeat reviewers, faster turnaround times and slightly better review quality.

As the peer review ecosystem continues to strain under growing submission volumes, shrinking reviewer availability and the influence of AI, it may be time for more publishers to reassess longstanding assumptions about reviewer compensation.

4//

What we lose when we outsource scientific writing

LSE Blog - 28 Jan 2026 - 6 min read

I consider myself a techno-optimist about the benefits AI can bring to peer review (primarily shortening it and removing some biases). However, people are often surprised that I am far less supportive of AI writing tools. With OpenAI launching Prism this week, this post on the always excellent LSE blog had me nodding along. Writing is thinking.

Scientific writing isn’t the packaging around knowledge. It’s one of the key places of knowledge production itself. Crafting a story around data and turning lab practice into words is as much part of science as the experiments themselves. When we outsource it, streamline it, or split it off from lab work, we change what “doing science” means.

5//

Peer Review and AI: Your (Human) Opinion Is What Matters

ACS Nano - 22 Jan 2026 - 6 min read

This editorial, co-authored by a group of ACS editors, is exactly what you might expect. The two pull quotes below illustrate what I mean.

Peer review is a human responsibility that cannot be delegated to AI. Without you, curiosity-driven science is fundamentally imperiled.

When selecting reviewers for a manuscript, we as editors specifically chose you because of your expertise; we value your unique perspective and evaluation, not that of an LLM, indeed the same reason why you are selected and not someone else.

To be fair, it is more a celebration of the strengths of human review, but its arguments against using LLMs as assistants to human reviewers feel somewhat dated and lean towards the alarmist. I would refer the authors to the Claude review comment quoted in story 1.

And finally…

The next frontier for public access: embedding signals of trustworthiness - many thanks to Meagan Phelan for alerting me to this great insight into trust signals in academic research

CERCA – Citation Extraction & Reference Checking Assistant - an open-source research tool that supports verification of bibliographic references in scientific manuscripts.

GPTZero finds 100 new hallucinations in NeurIPS 2025 accepted papers - https://gptzero.me/news/neurips/

Science Is Drowning in AI Slop - https://www.theatlantic.com/science/2026/01/ai-slop-science-publishing/685704/?gift=GCS9qaPOKpDc-5uFpG_FM_Nii-0zokc-rZz1cpF1QN4

AI Tools Are Changing Academic Publishing. How Can We Adopt Them Responsibly? - https://katinamagazine.org/content/article/future-of-work/2026/ai-tools-are-changing-publishing-adopt-them-responsibly

Let’s chat

I’ll be at the R2R conference later this month, but I’m always happy to chat by email or meet up: just reply to this email.

Curated by me, Chris Leonard.

If you want to get in touch, please simply reply to this email.