Scalene 52: Governance / CEP / Wikipedia

Humans | AI | Peer review. The triangle is changing.

Lots to share this week, so minimal pontificating. And in any case the first story has the intro I’ve been wanting to read for some time…

20th January 2026

1//

Peer review needs a revolution. AI is already driving it

Scholarly Futures - 13 Jan 2026 - 5 min read

Imagine an article about peer review that didn’t follow the usual tropes. One that didn’t open with the quote that like democracy it is imperfect, but the best system we have. It wouldn’t close summarising that until a better system is found, we would do well to understand its strengths and weaknesses.

Ruth Francis with some delectable takes on AI and peer review - and the need for stronger governance where AI is used:

If peer review is to be fair and accountable, strong governance is needed, and fast. Researchers should know if AI is evaluating their work and the role it plays in decisions. Governance should address: who is responsible for AI errors; how authors can challenge AI-based decisions; and, what level of explanation must systems provide for their assessments?

2//

Why scholarly publishing needs a neutral governance body for the AI age

Research Information - 14 Jan 2026 - 4 min read

Later that same day, I came across this piece from Darrell Gunter on why the checks and balances of a pre-AI era are no longer sufficient to maintain trust in academic publishing. Sometimes this newsletter just writes itself:

For decades, the scholarly publishing ecosystem has relied on a loose federation of publishers, libraries, and indexing services to manage trust. Retraction Watch, Crossref, PubMed, OpenAlex, and others have all done heroic work, but their authority is partial, unevenly implemented, and not designed for machine governance. Their signals are advisory rather than binding. Their metadata is often optional. And their integration into AI pipelines is inconsistent or nonexistent.

The result is a system in which every actor defines “truth,” “trust,” and “reliability” differently. That cannot work in an AI-driven research environment.

3//

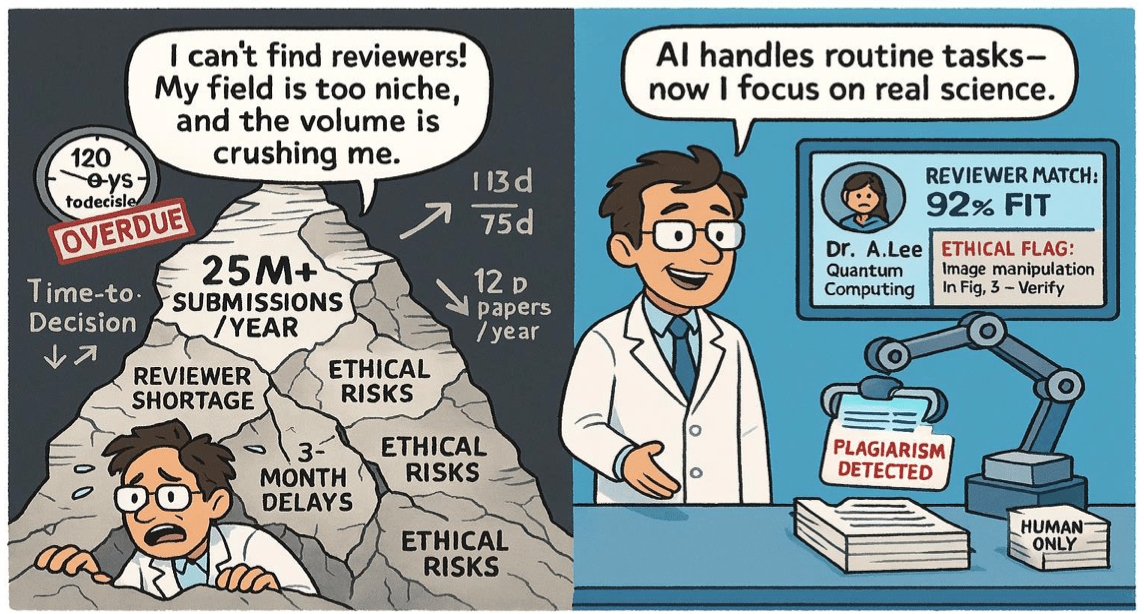

The Peer Review Crisis and the AI Revolution: Rethinking Scholarly Validation in the Age of Overload

Open Scope Publications - 19 Nov 2025 - 12 min read

I love this title and its cute little graphics, but it could have been even more strident, at least IMHO:

The crisis in peer review does not represent a system failure, but rather reflects a model designed for a pre-digital era. Artificial intelligence does not replace reviewers but enables them to focus on the most intellectually relevant tasks. By automating repetitive processes, increasing precision, and expanding participation, AI can help restore scientific evaluation’s credibility and accelerate knowledge advancement. The future of science does not belong exclusively to humans or machines, but to their strategic collaboration. In this new ecosystem, knowledge integrity will depend on hybrid systems capable of responding responsibly to the challenges of the information age.

4//

Critically Engaged Pragmatism: A Scientific Norm and Social, Pragmatist Epistemology for AI Science Evaluation Tools

arXiv.org - 13 Jan 2026 - 33 min read

Science faces crises in peer review, replicability, and AI-fabricated research. These pressures increase interest in automated AI tools to evaluate scientific claims. The author argues scientific communities often misapply credibility markers when under pressure and proposes a social, pragmatist epistemology to guide critical use of these tools. She names this new norm Critically Engaged Pragmatism.

It’s a great but lengthy read, so maybe get ChatGPT to summarise it for you (!)

https://arxiv.org/abs/2601.09753

Claude review of the same: https://claude.ai/public/artifacts/f8aed855-0fc7-4de7-859a-c0c652349e32

5//

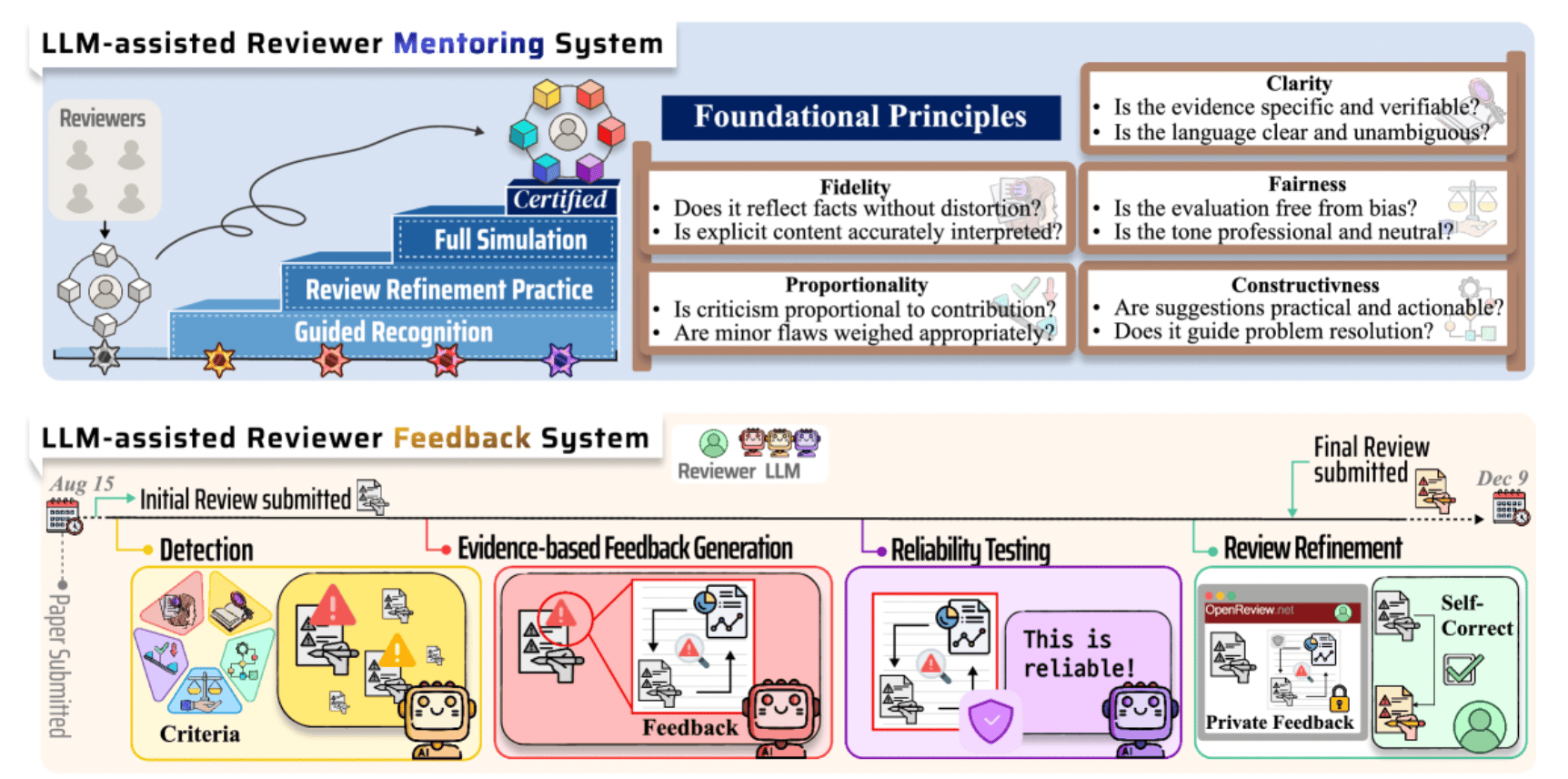

Position on LLM-Assisted Peer Review: Addressing Reviewer Gap through Mentoring and Feedback

arXiv.org - 14 Jan 2026 - 18 min read

The rapid expansion of AI research has intensified the Reviewer Gap, threatening the peer-review sustainability and perpetuating a cycle of low-quality evaluations. This position paper critiques existing LLM approaches that automatically generate reviews and argues for a paradigm shift that positions LLMs as tools for assisting and educating human reviewers. We define the core principles of high-quality peer review and propose two complementary systems grounded in these foundations: (i) an LLM-assisted mentoring system that cultivates reviewers’ long-term competencies, and (ii) an LLM-assisted feedback system that helps reviewers refine the quality of their reviews. This human-centered approach aims to strengthen reviewer expertise and contribute to building a more sustainable scholarly ecosystem.

https://arxiv.org/html/2601.09182v1

Claude review of the same: https://claude.ai/public/artifacts/0acb66d4-53c9-4848-9d54-6dc7951e4e60

And finally…

A bumper crop of links this week:

A Modest Proposal for the Peer Review Crisis - https://bsky.app/profile/pardoguerra.bsky.social/post/3mc3ykg7f7k2t

A new preprint server welcomes papers written and reviewed by AI - https://www.science.org/content/article/new-preprint-server-welcomes-papers-written-and-reviewed-ai

AI finds errors in 90% of Wikipedia's best articles - https://en.wikipedia.org/wiki/Wikipedia:Wikipedia_Signpost/2025-12-01/Opinion

‘A serious problem’: peer reviews created using AI can avoid detection - https://www.nature.com/articles/d41586-025-04032-1

Invisible Text Injection and Peer Review by AI Models - https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2844042

Scientific Production In The Era Of Large Language Models: With The Production Process Rapidly Evolving, Science Policy Must Consider How Institutions Could Evolve - DOI: 10.1126/science.adw3000

The 5 stages of the ‘enshittification’ of academic publishing - https://theconversation.com/the-5-stages-of-the-enshittification-of-academic-publishing-269714?utm_medium=article_native_share

From model collapse to citation collapse: risks of over-reliance on AI in the academy - https://www.timeshighereducation.com/campus/model-collapse-citation-collapse-risks-overreliance-ai-academy

Let’s chat

No specific conferences planned anytime soon - but always happy to chat, email, meet - just reply to this email.

Curated by me, Chris Leonard.

If you want to get in touch, please simply reply to this email.